Explainer: What’s R1 & Everything Else?

Is AI making you dizzy? A lot of industry insiders are feeling the same. R1 came out of nowhere a few days ago, and then there’s o1 and o3, but no o2. Gosh! It’s hard to know what’s going on. This post aims to be a guide to recent AI developments. It’s written for people who feel like they should know what’s going on, but don’t, because it’s insane out there.

The last few months:

- Sept 12, ‘24: o1-preview launched

- Dec 5, ‘24: o1 (full version) launched, along with o1-pro

- Dec 20, ‘24: o3 announced, saturates ARC-AGI, hailed as “AGI”

- Dec 26, ‘24: DeepSeek V3 launched

- Jan 20, ‘25: DeepSeek R1 launched, matches o1 but open source

- Jan 25, ‘25: Hong Kong University replicates R1 results

- Jan 25, ‘25: Hugging Face announces open-r1 to replicate R1, fully open source

Also, for clarity:

- o1, o3 & R1 are reasoning models

- DeepSeek V3 is an LLM, a base model. Reasoning models are fine-tuned from base models.

- ARC-AGI is a benchmark that’s designed to be simple for humans but excruciatingly difficult for AI. In other words, when AI crushes this benchmark, it’s able to do what humans do.

Let’s break it down.

Reasoning Models != Agents

Reasoning models are able to “think” before responding. LLMs think by generating tokens, so we’ve been training models to generate a ton of tokens in hopes that they stumble into the right answer. The thing is, it works.

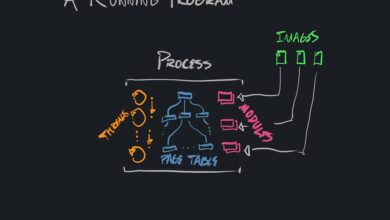

AI Agents are defined by two things:

- Autonomy (agency) to make decisions and complete a task

- Ability to interact with the outside world

LLMs & reasoning models alone only generate tokens, so they can do neither of these things. They need software to make their decisions real and to give them the ability to interact.

Agents are a system of AIs. They’re models tied together with software to autonomously interact with the world. Maybe hardware too.
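To make “models tied together with software” concrete, here’s a minimal sketch of the loop. Everything in it is made up for illustration: `fake_model` is a stub standing in for an LLM, and the `CALL`/`RESULT`/`DONE` text protocol and the `TOOLS` registry are hypothetical, not how any particular agent framework works.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: the software that lets text output affect the world.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split())),
}

def fake_model(transcript: List[str]) -> str:
    """Stub LLM: it only ever returns text. It asks for a tool once, then finishes."""
    if not any(line.startswith("RESULT") for line in transcript):
        return "CALL add 2 3"
    return "DONE the answer is " + transcript[-1].split(" ", 1)[1]

def run_agent(task: str, model: Callable[[List[str]], str], max_steps: int = 5) -> str:
    """The agent loop: the model decides, the software acts on the decision."""
    transcript = [f"TASK {task}"]
    for _ in range(max_steps):
        output = model(transcript)
        transcript.append(output)
        if output.startswith("DONE"):
            return output[len("DONE "):]
        if output.startswith("CALL"):
            _, name, arg = output.split(" ", 2)
            # Feed the tool result back so the model can use it on the next step.
            transcript.append("RESULT " + TOOLS[name](arg))
    return "gave up"

print(run_agent("add 2 and 3", fake_model))
```

The model never touches anything directly. It emits text, and the loop around it turns that text into actions and feeds the results back.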

Reasoning Is Important

Reasoning models get conflated with agents because currently, reasoning is the bottleneck. We need reasoning to plan tasks, supervise, validate, and generally be smart. We can’t have agents without reasoning, but there will likely be some new challenge once we saturate reasoning benchmarks.

Reasoning Needs To Be Cheap

Agents will run for hours or days, maybe 24/7. That’s the nature of acting autonomously. As such, costs add up.

As it stands, R1 costs about 30x less than o1 and achieves similar performance.
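A back-of-the-envelope sketch of why that matters for an always-on agent. The prices and the generation speed below are hypothetical placeholders, chosen only so the ratio matches the roughly 30x figure; they are not real price-list values.

```python
def daily_cost(tokens_per_second: float, usd_per_million_tokens: float) -> float:
    """Cost in USD of generating tokens nonstop for 24 hours."""
    tokens_per_day = tokens_per_second * 60 * 60 * 24
    return tokens_per_day / 1_000_000 * usd_per_million_tokens

# Hypothetical numbers for illustration only.
EXPENSIVE_PRICE = 60.0              # USD per million output tokens (assumed)
CHEAP_PRICE = EXPENSIVE_PRICE / 30  # "about 30x less"
SPEED = 50                          # tokens per second (assumed)

expensive = daily_cost(SPEED, EXPENSIVE_PRICE)
cheap = daily_cost(SPEED, CHEAP_PRICE)
print(f"expensive model: ${expensive:,.2f}/day")
print(f"cheap model:     ${cheap:,.2f}/day")
```

Under these assumptions, one agent running around the clock costs a few hundred dollars a day on the expensive model and under ten on the cheap one. Multiply by a fleet of agents and the gap decides what’s feasible.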

It’s cheap, open source, and has validated what OpenAI is doing with o1 & o3.

There had been some predictions about how o1 works, based on public documentation, and the public R1 paper corroborates them almost entirely. So, we know how o1 is scaling into o3, o4, …

Where do we stand? Are we hurtling upwards? Standing still? What are the drivers of change?

Pretraining Scaling Is Out

When GPT-4 hit, there were these dumb scaling laws: increase data & compute, and you simply get a better model (the pretraining scaling laws). These are gone. They’re not dead, per se, but we ran into some bumps getting access to more data, and in the meantime we discovered new scaling laws.

Inference Time Scaling Laws

This is about reasoning models, like o1 & R1. The longer they think, the better they perform.

It wasn’t, however, clear how exactly one should spend more computation in order to achieve better results. The naive assumption was that Chain of Thought (CoT) could work; you just train the model to do CoT. The trouble with that is finding the fastest path to the answer. Entropix was one idea: use the model’s internal signals to find the most efficient path. Another was Monte Carlo Tree Search (MCTS), where you generate many paths but only take one. There were several others.

It turns out CoT is best. R1 is just doing a simple, single-line chain of thought trained by RL (maybe Entropix was on to something?). It seems safe to assume o1 is doing the same.

Down-Sized Models (Scaling Laws??)

The first signal was GPT-4-turbo, and then GPT-4o, and the Claude series, and all other LLMs. They were all getting smaller and cheaper throughout ‘24.

If generating more tokens is your path to reasoning, then lower latency is what you need. Smaller models compute faster (fewer calculations to make), and thus smaller = smarter.
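The arithmetic behind “smaller = smarter” is simple: with a fixed amount of time to answer, a faster model gets to think with more tokens. The speeds below are made-up round numbers, not measurements of any real model.

```python
def thinking_tokens(time_budget_s: float, tokens_per_second: float) -> int:
    """How many tokens a model can generate before the time budget runs out."""
    return int(time_budget_s * tokens_per_second)

# Hypothetical generation speeds for a big and a small model.
big = thinking_tokens(time_budget_s=30, tokens_per_second=20)
small = thinking_tokens(time_budget_s=30, tokens_per_second=100)
print(big, small)
```

With these assumed speeds, the small model gets five times as many thinking tokens in the same 30 seconds.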

Reinforcement Learning (Scaling Laws??)

R1 used GRPO (Group Relative Policy Optimization) to train the model to do CoT at inference time. It’s just dumb reinforcement learning (RL) with nothing complicated. No complicated verifiers, no external LLMs needed. Just RL with basic reward functions for accuracy & format.
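Here’s a rough sketch of what “basic reward functions for accuracy & format” can look like, plus the group-relative part of GRPO: sample several completions for one prompt, then score each against the group’s mean and standard deviation. The `<think>`/`<answer>` tags follow the template described in the R1 paper; the exact-match check, the equal weighting of the two rewards, and the use of population standard deviation are my assumptions. The actual policy update (clipping, KL penalty) is left out entirely.

```python
import re
from statistics import mean, pstdev
from typing import List

# Reasoning inside <think>, final answer inside <answer>.
PATTERN = re.compile(r"^<think>.*?</think>\s*<answer>(.*?)</answer>$", re.DOTALL)

def format_reward(completion: str) -> float:
    """1.0 if the completion follows the think/answer template, else 0.0."""
    return 1.0 if PATTERN.match(completion.strip()) else 0.0

def accuracy_reward(completion: str, expected: str) -> float:
    """1.0 if the extracted answer exactly matches the expected answer (assumed check)."""
    m = PATTERN.match(completion.strip())
    return 1.0 if m and m.group(1).strip() == expected else 0.0

def group_advantages(rewards: List[float]) -> List[float]:
    """Normalize each reward against its own group: (r - mean) / std."""
    mu, sigma = mean(rewards), pstdev(rewards)
    if sigma == 0:
        return [0.0 for _ in rewards]  # all samples tied, so no learning signal
    return [(r - mu) / sigma for r in rewards]

# One prompt ("what is 2+2?"), a group of four sampled completions.
completions = [
    "<think>2+2 is 4</think><answer>4</answer>",
    "<think>maybe 5</think><answer>5</answer>",
    "4",
    "<think>2+2=4</think> <answer>4</answer>",
]
rewards = [format_reward(c) + accuracy_reward(c, "4") for c in completions]
advantages = group_advantages(rewards)
print(rewards)
print([round(a, 2) for a in advantages])
```

There’s no learned reward model anywhere in this. The rewards are plain string checks, and the group itself serves as the baseline.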

R1-Zero is a version of R1 from DeepSeek that only does GRPO and nothing else.

It’s more accurate than R1, but it hops between various languages like English & Chinese at will, which makes it sub-optimal for its human users (who aren’t typically polyglots).

Why does R1-Zero jump between languages? My thought is that different languages express different kinds of concepts more effectively, e.g. the whole “what’s the German word for [paragraph of text]?” meme.

Today (Jan 25, ‘25), someone demonstrated that any reinforcement learning algorithm would work. They tried GRPO, PPO, and PRIME; they all work just fine. And it turns out that the magic number is 1.5B. If the model is bigger than 1.5B parameters, the inference scaling behavior will spontaneously emerge regardless of which RL approach you use.

How far will it go?

Model Distillation (Scaling Laws??)

R1 distilled from previous checkpoints of itself.

Distillation is when one teacher model generates training data for a student model. Typically it’s assumed that the teacher is a bigger model than the student. R1 used previous checkpoints of the same model to generate training data for Supervised Fine Tuning (SFT). They iterate between SFT & RL to improve the model.
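A toy sketch of the data-generation half of that loop: a checkpoint samples answers, a simple check throws out the wrong ones, and what survives becomes SFT data for the next round. The `noisy_checkpoint` stub and every name here are hypothetical; no real model or training is involved, and this is not DeepSeek’s actual pipeline.

```python
import random
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (prompt, completion)

def build_sft_dataset(
    prompts: List[Tuple[str, int]],
    sample: Callable[[str, random.Random], int],
    samples_per_prompt: int,
    rng: random.Random,
) -> List[Example]:
    """Sample from the teacher checkpoint and keep only verified-correct answers."""
    dataset: List[Example] = []
    for prompt, expected in prompts:
        for _ in range(samples_per_prompt):
            answer = sample(prompt, rng)
            if answer == expected:
                dataset.append((prompt, str(answer)))
                break  # keep one verified sample per prompt
    return dataset

def noisy_checkpoint(prompt: str, rng: random.Random) -> int:
    """Stub teacher: answers 'a+b' correctly about half the time, else off by one."""
    a, b = (int(x) for x in prompt.split("+"))
    return a + b if rng.random() < 0.5 else a + b + 1

prompts = [("1+1", 2), ("2+3", 5), ("10+7", 17)]
data = build_sft_dataset(prompts, noisy_checkpoint, samples_per_prompt=8, rng=random.Random(0))
print(data)
```

The teacher is wrong half the time, but the filtered dataset is clean. That’s why a model can teach a later version of itself: the filter, not the teacher, sets the quality of the data.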

How far can this go?

A long time ago (9 days), there was a prediction that GPT5 exists and that GPT4o is just a distillation of it.

This article theorized that OpenAI and Anthropic have found a cycle to keep creating ever greater models by training big models and then distilling, and then using the distilled model to create a larger model.

I’d say that the R1 paper largely confirms that that’s possible (and thus likely to be what’s happening).

If so, this may continue for a very long time.

Given the current state of things:

- Pre-training is hard (but not dead)

- Inference scaling

- Downsizing models

- RL scaling laws

- Model distillation scaling laws

It seems unlikely that AI is slowing down. One scaling law slowed down and four more appeared. This thing is going to accelerate and continue accelerating for the foreseeable future.

I coined the term “distealing”: unauthorized distillation of models.

Software is political now and AI is at the center. AI seems to be factored into just about every political axis. Most interesting is China vs. USA.

Strategies:

- USA: heavily funded, pour money onto the AI fire as fast as possible

- China: under repressive export controls, pour smarter engineers & researchers into finding cheaper solutions

- Europe: regulate or open source AI, either is fine

There’s been heavy discussion about whether DeepSeek distealed R1 from o1. Given the reproductions of R1, I’m finding it increasingly unlikely that that’s the case. Still, a Chinese lab came out of seemingly nowhere and overtook OpenAI’s best available model. There’s going to be tension.

Also, AI will soon (if not already) increase in abilities at an exponential rate. The political and geopolitical implications are absolutely massive.

Yes, it’s a dizzying rate of development. The main takeaway is that R1 provides clarity where OpenAI was previously opaque. Thus, the future of AI is clearer, and it seems to be accelerating rapidly.